Hey folks,

As thanks to the players who participated in WCS Austin 2017 and produced a wonderful replay pack, I’ve put together a quick analysis of Time To First Action at that event.

Time To First Action is an attempt to quantify a player’s mechanical skill. It measures the time gap between a player moving their camera a substantial distance and subsequently taking their first action. The faster the player acts – in other words, processes visual information, decides on an action, and executes – the stronger their mechanics, at least in theory. I wrote a longer piece on the concept if you’re interested.

Before we go further, remember that this is a fun theorycrafting exercise. Don’t go off and flame someone because these numbers make them look slow.

Methodology

Based on the replay pack provided by Blizzard, I produced a time-to-first-action analysis of the players at WCS Austin. I grouped players by the number of game loops between a camera movement and an action. A game loop is a discrete unit of time in StarCraft, sort of like a clock tick. Actions in StarCraft are performed as turns – meaning that if you took two players and one executed every action 1 millisecond faster than the other, it generally wouldn’t make a difference, since both players’ actions would end up in the same turn. StarCraft’s clock ticks 16 times per in-game second which, I believe, translates to ~22 times per real-life second (22.4 on the Faster game speed).
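To get a feel for what a game loop means in real time, here is a minimal conversion sketch. It assumes the Faster game speed, where the game runs 1.4x faster than real time, so 16 loops per in-game second works out to 22.4 loops per real second. The constant and function names are made up for illustration.

```python
# Assumption: "Faster" game speed, where one in-game second is 16 game loops
# and the game clock runs 1.4x faster than real time.
LOOPS_PER_GAME_SECOND = 16
FASTER_SPEED_FACTOR = 1.4
LOOPS_PER_REAL_SECOND = LOOPS_PER_GAME_SECOND * FASTER_SPEED_FACTOR  # ~22.4


def loops_to_real_ms(loops: float) -> float:
    """Convert a number of game loops to real-time milliseconds."""
    return loops / LOOPS_PER_REAL_SECOND * 1000.0
```

Under that assumption, a single game loop lasts a little under 45 real-time milliseconds, which is the finest resolution this kind of analysis can have.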

(Technical details on turns in real time strategy games can be found here).

As with last time, I defined a “significant” camera movement as a camera update more than 5000 units from the camera’s last location. For reference, a typical StarCraft II map spans between 36,000 and 45,000 units along each of its X and Y axes.
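The significance check can be sketched in a few lines. This is an illustration rather than the exact code behind the numbers: the 5000-unit threshold comes from the definition above, while the function name and the (x, y) tuple representation are assumptions.

```python
import math

SIGNIFICANT_DISTANCE = 5000  # threshold from the text, in camera units


def is_significant_move(prev, curr, threshold=SIGNIFICANT_DISTANCE):
    """Return True if the camera moved more than `threshold` units.

    `prev` and `curr` are (x, y) tuples in the replay's camera units.
    """
    dx = curr[0] - prev[0]
    dy = curr[1] - prev[1]
    return math.hypot(dx, dy) > threshold
```

On a map roughly 40,000 units wide, this threshold corresponds to a jump of about an eighth of the map, so small scrolls and edge pans are ignored.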

Results

The top column is the median number of game loops in-between substantial camera movement and first action. Lower is better – it means the player reacted faster. Here’s the first batch:

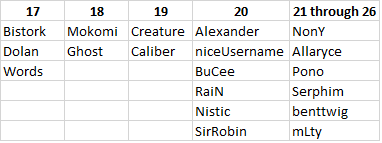

And here’s the second batch:

Predictive Capability

I ran a few different numbers here – the win rate of the player with higher APM and the win rate of the player with lower median time-to-first-action.

| Player Characteristic | Player Winrate |
| --- | --- |
| Higher APM | 64% |
| Lower Median TTFA | 63% |

Sadly, the hype doesn’t pan out this time around – higher APM is just as good a predictor as lower time-to-first-action.
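For anyone who wants to run the same comparison on their own replays, here is a rough sketch of how such a win rate could be computed. The game record layout (a "winner" and a "loser" dict of per-player stats) and the key names are hypothetical, and skipping ties is my own choice, not necessarily how the figures above were produced.

```python
def predictor_win_rate(games, key, higher_is_better):
    """Fraction of games won by the player favored by the stat `key`.

    Each game is a dict with "winner" and "loser" entries, each a dict of
    per-player stats. Games where both players tie on `key` are skipped.
    """
    hits = 0
    total = 0
    for game in games:
        w = game["winner"][key]
        l = game["loser"][key]
        if w == l:
            continue  # no favorite, so this game says nothing about the predictor
        total += 1
        if (w > l) == higher_is_better:
            hits += 1
    return hits / total if total else float("nan")
```

Calling it with `key="apm", higher_is_better=True` gives the first row of the table, and `key="median_ttfa", higher_is_better=False` gives the second.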

Differences with a Regular Player

Because professionals are all mechanically strong, their time-to-first-action graphs look very similar. However, if you compare professionals with regular players, you’ll see a big difference. Below I’ve put together a graph: on the vertical axis you’ll see time-to-first-action in milliseconds, on the horizontal axis you’ll see percentiles. For instance, at the 70% mark on the horizontal axis, 70% of that player’s time-to-first-actions were at or below the number on the vertical axis.

I’ve selected three players: WCS Austin’s Finalists, Neeb and Nerchio, and High Diamond / low All-Time Legend, brownbear.
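A percentile curve like the one described above can be built from a single player's list of time-to-first-action values. The sketch below uses the nearest-rank method, which is one reasonable choice and not necessarily the one used for the graph; the function name is hypothetical.

```python
def percentile_curve(values, percentiles=range(10, 101, 10)):
    """Map each integer percentile p to the smallest sample such that
    at least p% of all samples are at or below it (nearest-rank method)."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    n = len(ordered)
    curve = {}
    for p in percentiles:
        # Integer ceiling of p * n / 100, clamped to at least rank 1.
        rank = max(1, (p * n + 99) // 100)
        curve[p] = ordered[rank - 1]
    return curve
```

Plotting the resulting values against their percentiles, one line per player, gives the kind of comparison described here: a faster player's line sits lower across the whole range.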

Conclusion

Time to First Action is a really interesting concept from a skills development perspective. We’re really fortunate that StarCraft’s replays make it easy for us to analyze.

If you enjoyed this post, I’d love it if you followed me on Twitter and Facebook and checked out my game-design-focused YouTube and Twitch channels. Thanks for reading and see you next time!

P.S. In terms of what’s next, I’m hard at work on my next StarCraft-related project, by far the largest piece of content I’ve ever made. It’ll be out in the next few months – stay tuned!

Update 5/13: Generating This Data

Hey folks,

A couple of people have asked me how these numbers are calculated. I don’t release code for personal reasons, but the algorithm is straightforward and easy to set up yourself.

I use Blizzard’s S2Protocol to read game events from replay files. S2Protocol supports a bunch of different commands, but I look at three:

- initdata and details: between the two of these, I can get basic information from a replay, like who the players are, the map name, etc. The most important piece of information is the userId, which you need in order to figure out which game event belongs to which player. To get the userId for a given playerName, you first get the playerName : workingSetSlotId mapping from details, and then the workingSetSlotId : userId mapping from initdata.

- gameevents: these are the actual events that players executed in a game, like moving the camera or moving a unit. With the userId : player mapping from above, you can analyze each individual player’s actions.

- I didn’t bother looking at trackerevents but it looks like there is useful information in there.
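The two-step lookup from player name to userId can be sketched as below. It works on the already-decoded details and initdata structures, so it has no dependencies of its own. The field names (`m_playerList`, `m_name`, `m_workingSetSlotId`, `m_syncLobbyState`, `m_lobbyState`, `m_slots`, `m_userId`) reflect my understanding of S2Protocol's decoded output and should be checked against your own replays; the function name is made up.

```python
def user_ids_by_player_name(details, initdata):
    """Build {playerName: userId} from decoded `details` and `initdata`.

    Assumed layout (verify against your S2Protocol version):
      details["m_playerList"][i] has "m_name" and "m_workingSetSlotId"
      initdata["m_syncLobbyState"]["m_lobbyState"]["m_slots"][j] has
          "m_workingSetSlotId" and "m_userId"
    """
    slots = initdata["m_syncLobbyState"]["m_lobbyState"]["m_slots"]
    # Step 2 of the lookup: workingSetSlotId -> userId (skip empty slots).
    slot_to_user = {
        slot["m_workingSetSlotId"]: slot["m_userId"]
        for slot in slots
        if slot.get("m_userId") is not None
    }
    mapping = {}
    for player in details["m_playerList"]:
        # Step 1 of the lookup: playerName -> workingSetSlotId.
        slot_id = player.get("m_workingSetSlotId")
        if slot_id in slot_to_user:
            name = player["m_name"]
            if isinstance(name, bytes):  # names may be decoded as bytes
                name = name.decode("utf-8", errors="replace")
            mapping[name] = slot_to_user[slot_id]
    return mapping
```

With this mapping in hand, each game event can be assigned to a player by the userId it carries.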

Once I have a list of all the gameevents, I iterate through them. Here’s the rough pseudo-code:

    for event in game_events:
        if event.type == CameraUpdateEvent:
            // Check to see if this was a significant camera movement
            // relative to the last camera position.
            // (Distance is calculated via Pythagorean distance.)
            // If so, save the timestamp (game loop).
            //
            // Then update the last camera position.
        if event.type == CommandEvent:
            // If we have a saved last significant camera movement,
            // calculate time delta, add to time-to-first-actions list,
            // and mark last significant camera movement as null.
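For readers who want something they can run, here is one way the pseudo-code might look in Python for a single player's event stream. It is a sketch under assumptions: the `Event` class and its "camera" / "command" labels are hypothetical stand-ins for whatever your replay parser produces, and when several significant camera moves happen before a command, it keeps the most recent one, which is my reading of the pseudo-code.

```python
import math
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Event:
    kind: str                 # "camera" or "command" (hypothetical labels)
    gameloop: int             # game loop at which the event occurred
    x: Optional[int] = None   # camera target, only set on camera events
    y: Optional[int] = None


def time_to_first_actions(events: List[Event], threshold: int = 5000) -> List[int]:
    """Return game-loop deltas between each significant camera move
    and the first command that follows it."""
    deltas = []
    last_pos = None        # last known camera position
    pending_loop = None    # game loop of the last significant move, if any
    for ev in events:
        if ev.kind == "camera" and ev.x is not None and ev.y is not None:
            if last_pos is not None:
                dist = math.hypot(ev.x - last_pos[0], ev.y - last_pos[1])
                if dist > threshold:
                    pending_loop = ev.gameloop
            last_pos = (ev.x, ev.y)
        elif ev.kind == "command" and pending_loop is not None:
            deltas.append(ev.gameloop - pending_loop)
            pending_loop = None
    return deltas
```

Taking `median(time_to_first_actions(events))` for each player then gives a per-player figure comparable to the medians in the results.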

Sadly, there’s no intelligence around filtering (e.g. removing the first two to three minutes of game play). Thanks to @KarlJayG for the suggestion – I’ll look into this for next time!
